#8 [Breaking] GPT-4 Launched!

OpenAI just announced the launch of GPT-4, which can handle both text and image data. Here's everything we know (so far).

Hey, sorry for breaking from the routine, but something BIG just happened and we could not wait till Friday to share our excitement with you all.

OpenAI announced the launch of GPT-4 🎉

(and it can handle both text and images)

What’s in store:

Everything we know (so far)

What can GPT-4 do?

What can GPT-4 not do? (limitations)

Everything we know (so far)

FEATURES

GPT-4 is a multimodal model that accepts both image and text inputs and emits text outputs, enabling tasks such as caption generation and classification.

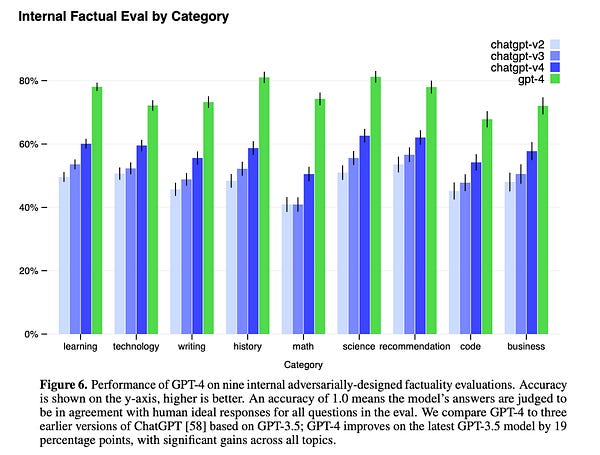

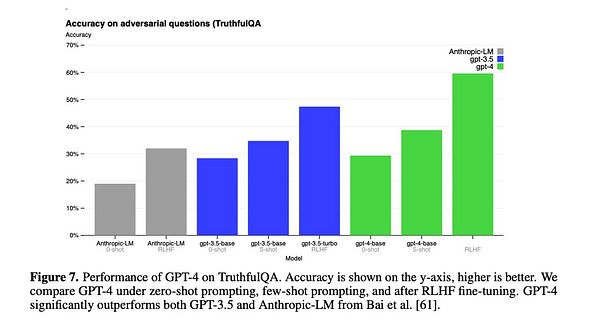

GPT-4 can solve difficult problems with greater accuracy, thanks to its broader general knowledge and problem-solving abilities.

GPT-4 is more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5. It surpasses ChatGPT in its advanced reasoning capabilities.

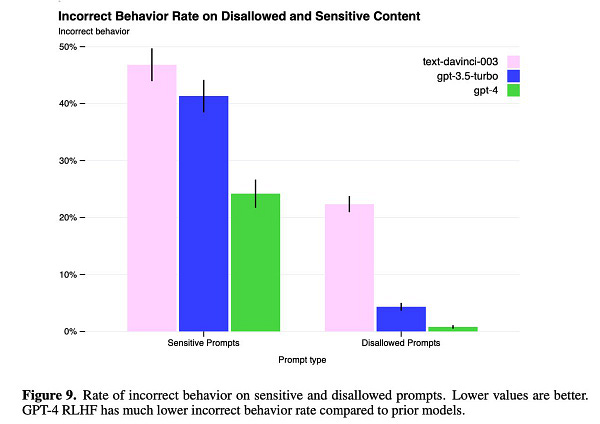

GPT-4 is safer and more aligned. According to OpenAI's internal evaluations, it is 82% less likely to respond to requests for disallowed content and 40% more likely to produce factual responses than GPT-3.5.

GPT-4 still has many known limitations that OpenAI is working to address, such as social biases, hallucinations, and adversarial prompts.

AVAILABILITY [API waitlist]

GPT-4 is available on ChatGPT Plus and as an API for developers to build applications and services. (The API is behind a waitlist right now.)

Duolingo, Khan Academy, Stripe, Be My Eyes, and Mem, among others, are already using it.

PRICING (seems too steep to us) [link]

GPT-4 with an 8K context window (about 13 pages of text) will cost $0.03 per 1K prompt tokens, and $0.06 per 1K completion tokens.

GPT-4-32k with a 32K context window (about 52 pages of text) will cost $0.06 per 1K prompt tokens, and $0.12 per 1K completion tokens.
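To get a feel for what these rates mean per request, here is a minimal sketch that turns the launch prices quoted above into a cost estimate. The dictionary keys and the `estimate_cost` helper are our own illustrative names, not part of any OpenAI library, and the prices are only the ones listed in this post.

```python
from decimal import Decimal

# USD per 1K tokens, copied from the launch pricing quoted above.
# The keys are illustrative labels chosen for this sketch.
PRICES_PER_1K = {
    "gpt-4": {"prompt": Decimal("0.03"), "completion": Decimal("0.06")},
    "gpt-4-32k": {"prompt": Decimal("0.06"), "completion": Decimal("0.12")},
}


def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Estimate the USD cost of one request (hypothetical helper)."""
    prices = PRICES_PER_1K[model]
    total_per_1k = (
        prices["prompt"] * prompt_tokens
        + prices["completion"] * completion_tokens
    )
    # Prices are quoted per 1K tokens, so scale back down.
    return total_per_1k / 1000
```

For example, a 1,000-token prompt with a 500-token completion on the 8K model comes to $0.06, while a 30,000-token prompt with a 2,000-token completion on the 32K model comes to $2.04.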

What can GPT-4 do?

More context: 32K tokens ≈ 25K words ≈ 52 pages of text
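The conversion above can be turned into a rough rule of thumb. This is a sketch only: the two ratios are derived from the round figures in this post (treating "K" as 1,000), and real token counts depend on the tokenizer and the text, so treat the results as ballpark numbers.

```python
# Ratios implied by "32K tokens = ~25K words = ~52 pages" (assumption: K = 1,000).
WORDS_PER_TOKEN = 25_000 / 32_000  # about 0.78 words per token
WORDS_PER_PAGE = 25_000 / 52       # about 481 words per page


def tokens_to_words(tokens: int) -> float:
    """Approximate word count for a given number of tokens."""
    return tokens * WORDS_PER_TOKEN


def tokens_to_pages(tokens: int) -> float:
    """Approximate page count for a given number of tokens."""
    return tokens_to_words(tokens) / WORDS_PER_PAGE
```

As a sanity check, `tokens_to_pages(8_000)` works out to roughly 13 pages, which matches the figure quoted for the 8K context window in the pricing section.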

What can GPT-4 not do? (limitations)

GPT-4 has similar limitations to earlier GPT models. Most importantly, it is still not fully reliable (it “hallucinates” facts and makes reasoning errors). Great care should be taken when using language model outputs, particularly in high-stakes contexts, with the exact protocol (such as human review, grounding with additional context, or avoiding high-stakes uses altogether) matching the needs of specific applications.

GPT-4 generally lacks knowledge of events that occurred after September 2021, which is when the vast majority of its pre-training data cuts off, and the model does not learn from its experience.

It can sometimes make simple reasoning errors that do not seem to comport with competence across so many domains or be overly gullible in accepting obviously false statements from a user.

It can fail at hard problems the same way humans do, such as introducing security vulnerabilities into the code it produces.

GPT-4 can also be confidently wrong in its predictions, not taking care to double-check work when it’s likely to make a mistake.

GPT-4 has various biases in its outputs that OpenAI has made efforts to correct, but which will take some time to fully characterize and manage.

GPT-4 represents a significant step towards broadly useful and safely deployed AI systems.

More to follow in our weekly edition. Stay tuned.

How are Khan Academy, Duolingo, the Government of Iceland, and some more cool companies using GPT-4?

How much better is GPT-4 than GPT-3?

And, is GPT-4 worth it?

We hope you liked our coverage of GPT-4. As always, we cannot stress enough how eager we are to hear from you. Your remarks, insights, and feedback would be super helpful, so please tell us what you liked and what you didn’t.

And, do tell your friends about us.

See you in the next couple of days.